Jetson Nano ROS Vision AI Part 3

Photo by Hunter Harritt on Unsplash

Live Video Inferencing Part 3 DetectNet⌗

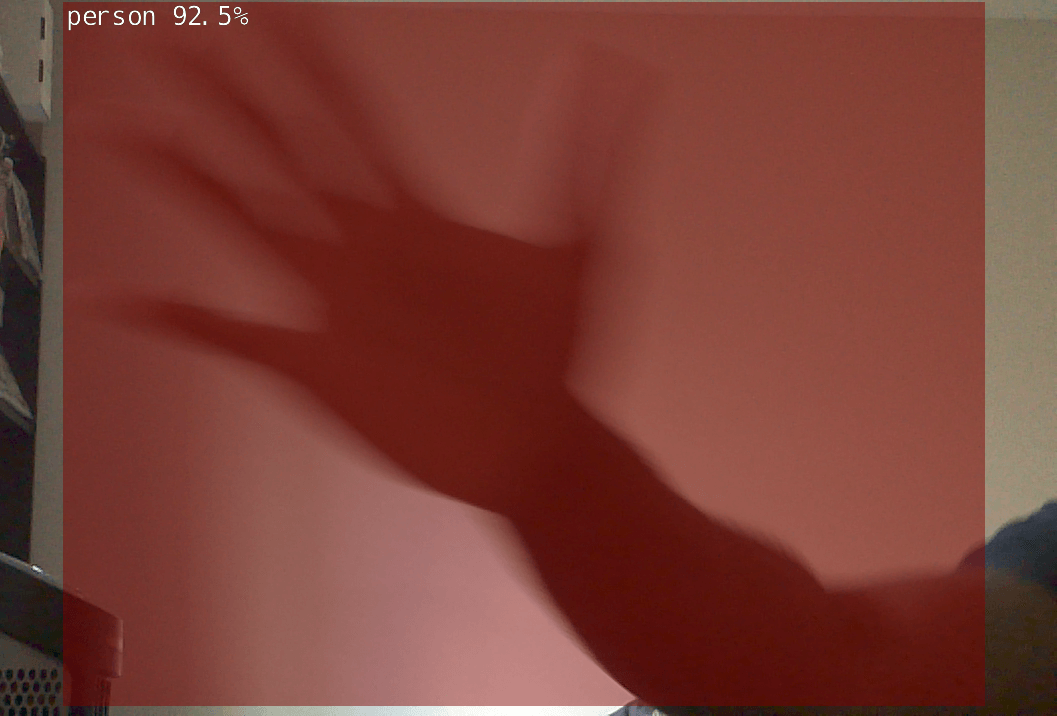

Our Goal: to create a ROS node that receives raspberry Pi CSI camera images, runs Object Detection and outputs the result as a message that we can view using rqt_image_view.

Object Detection⌗

We will be generating bounding boxes around objects detected in the image. Unlike image classification, the model can detect multilpe objects per image.

Pre-requisites⌗

Completed the previous post here

Live Video Inferencing (DetectNet)⌗

If you have completed the previous tutorial then this code will look very similar.

First lets put down some detectnet node code.

Create a file named node_imagetaker_detectnet.cpp in your <catkin-workspace-location>/<package-name>/src/ directory

And add the following to it, we will describe what each portion of the code does

Let’s add our needed imports

#include <iostream>

#include <ctime>

#include <sstream>

#include <ros/ros.h>

#include <image_transport/image_transport.h>

#include <cv_bridge/cv_bridge.h>

#include <opencv2/imgproc/imgproc.hpp>

#include <opencv2/highgui/highgui.hpp>

#include <opencv2/imgcodecs.hpp>

#include <sensor_msgs/image_encodings.h>

#include <sensor_msgs/Image.h>

// the API to our segmentation model

#include <jetson-inference/detectNet.h>

// allows us to use the gpu for image operations

#include <jetson-utils/cudaMappedMemory.h>

#include <jetson-utils/loadImage.h>

#include "image_converter.h"

#include <unordered_map>

using namespace cv;

using namespace std;

- add our globals

// globals

detectNet* net = NULL;

// image converter object

imageConverter* cvt = NULL;

// where we will publish our results too

ros::Publisher* result_pub = NULL;

- Add our image callback that will be called when we receive a message from the camera publisher.

- This is where the image will be passed into the AI model for inferencing. We will also overlay the resulting bounding box here.

// input image subscriber callback

void img_callback( const sensor_msgs::ImageConstPtr& input )

{

ROS_INFO ("Received Image");

// convert sensor_msgs[rgb] to opencv[brg]

cv_bridge::CvImagePtr cv_ptr;

cv_bridge::CvImagePtr cv_ptr_flip; // pointer for flipped image

try

{

cv_ptr = cv_bridge::toCvCopy(input, sensor_msgs::image_encodings::BGR8);

cv_ptr_flip = cv_bridge::toCvCopy(input, sensor_msgs::image_encodings::BGR8);

}

catch (cv_bridge::Exception& e)

{

ROS_ERROR("cv_bridge exception: %s", e.what());

return;

}

// we are doing a 180 deg flip since

// my camera is upside down

const int img_flip_mode_ = -1;

// flip the image

cv::flip(cv_ptr->image, cv_ptr_flip->image, img_flip_mode_);

// convert converted image back to a sensor_msgs::ImagePtr

// for use with nvidia / other ML algorithms

sensor_msgs::ImagePtr flippedImage = cv_ptr_flip->toImageMsg();

// convert the image TO reside on GPU

// the converting TO and converting FROM are the SAME funtion name

// with different signatures

if( !cvt || !cvt->Convert(flippedImage) )

{

ROS_ERROR (

"failed to convert %ux%u %s image",

flippedImage->width,

flippedImage->height,

flippedImage->encoding.c_str()

);

return;

}

else {

ROS_INFO (

"Converted %ux%u %s image",

flippedImage->width,

flippedImage->height,

flippedImage->encoding.c_str()

);

}

// classify the image

detectNet::Detection* detections = NULL;

/*

enum OverlayFlags

{

OVERLAY_NONE = 0, // < No overlay.

OVERLAY_BOX = (1 << 0), //< Overlay the object bounding boxes

OVERLAY_LABEL = (1 << 1), //< Overlay the class description labels

OVERLAY_CONFIDENCE = (1 << 2), //< Overlay the detection confidence values

};

*/

// detect AND render the all possible info i.e

// bounding box, label, confidence

const uint32_t flags = detectNet::OVERLAY_BOX | detectNet::OVERLAY_LABEL | detectNet::OVERLAY_CONFIDENCE;

const int numDetections = net->Detect(

cvt->ImageGPU(),

cvt->GetWidth(),

cvt->GetHeight(),

&detections,

flags

);

// if an error occured

if( numDetections < 0 )

{

ROS_ERROR(

"failed to run object detection on %ux%u image",

flippedImage->width,

flippedImage->height

);

return;

}

/*

// if no detections

if( numDetections == 0 )

{

ROS_INFO(

"detected %i objects in %ux%u image",

numDetections,

flippedImage->width,

flippedImage->height

);

return;

}

*/

// if objects were detected, send out message

if( numDetections >= 0 )

{

ROS_INFO(

"detected %i objects in %ux%u image",

numDetections,

flippedImage->width,

flippedImage->height

);

// get our image with overlays back from the GPU

// the converting TO and converting FROM are the SAME funtion name

// with different signatures

sensor_msgs::Image outputImage;

// outputImage.header.stamp = ros::Time::now();

if(!cvt || !cvt->Convert(outputImage, sensor_msgs::image_encodings::BGR8)) {

ROS_ERROR ("failed to convert outputImage image back");

return;

}

else {

ROS_INFO (

"Converted %ux%u %s image back",

outputImage.width,

outputImage.height,

outputImage.encoding.c_str()

);

}

// save the output image to png

sensor_msgs::ImagePtr out_img_ptr;

cv_bridge::CvImagePtr out_cv_img_ptr;

try

{

out_img_ptr = boost::make_shared<sensor_msgs::Image>(outputImage);

out_cv_img_ptr = cv_bridge::toCvCopy(

out_img_ptr,

sensor_msgs::image_encodings::BGR8

);

// publish image result

result_pub->publish(outputImage);

// save the image with the bounding box

// saveImage(&out_cv_img_ptr->image);

}

catch (cv_bridge::Exception& e)

{

ROS_ERROR("cv_bridge exception: %s", e.what());

return;

}

}

}

- Finally our main function where we define our subscribers and publishers and initialize our node

int main (int argc, char **argv) {

ros::init(argc, argv, "imagetaker_detectnet");

ros::NodeHandle nh;

ros::NodeHandle private_nh("~");

std::string class_labels_path;

std::string prototxt_path;

std::string model_path;

// can change this to another model you have

// e.g rosrun <package-name> imagetaker_detectnet _model_name:=pednet

// "ssd-mobilenet-v2" is better than "pednet"!

std::string model_name = "ssd-mobilenet-v2";

float mean_pixel = 0.0f;

// If the desired objects aren't being detected in the video feed or

// you're getting spurious detections, try decreasing or increasing

// the detection threshold (default value is 0.5)

float threshold = 0.5f;

private_nh.param<std::string>(

"model_name",

model_name,

"ssd-mobilenet-v2"

);

// determine which built-in model was requested

detectNet::NetworkType model = detectNet::NetworkTypeFromStr(model_name.c_str());

if( model == detectNet::CUSTOM )

{

ROS_ERROR(

"invalid built-in pretrained model name '%s', defaulting to pednet",

model_name.c_str()

);

model = detectNet::PEDNET;

}

// create network using the built-in model

net = detectNet::Create(model);

if( !net )

{

ROS_ERROR("failed to load detectNet model");

return 0;

}

/*

* create the class labels parameter vector

*/

std::hash<std::string> model_hasher; // hash the model path to avoid collisions on the param server

std::string model_hash_str = std::string(

net->GetModelPath()) + std::string(net->GetClassPath()

);

const size_t model_hash = model_hasher(model_hash_str);

ROS_INFO("model hash => %zu", model_hash);

ROS_INFO("hash string => %s", model_hash_str.c_str());

// obtain the list of class descriptions

std::vector<std::string> class_descriptions;

const uint32_t num_classes = net->GetNumClasses();

for( uint32_t n=0; n < num_classes; n++ ) {

class_descriptions.push_back(net->GetClassDesc(n));

}

// create the key on the param server

std::string class_key = std::string("class_labels_") + std::to_string(model_hash);

// private_nh.setParam(class_key, class_descriptions);

// populate the vision info msg

std::string node_namespace = private_nh.getNamespace();

ROS_INFO("node namespace => %s", node_namespace.c_str());

/*

* create an image converter object

*/

cvt = new imageConverter();

if(!cvt)

{

ROS_ERROR("failed to create imageConverter object");

return 0;

}

/*

* advertise publisher topics

*/

ros::Publisher result_publsh = private_nh.advertise<sensor_msgs::Image>("image_detections", 2);

result_pub = &result_publsh;

/*

* subscribe to image topic

*/

ros::Subscriber img_sub = nh.subscribe(

"/csi_cam_0/image_raw",

5,

img_callback

);

/*

* wait for messages

*/

ROS_INFO("imagetaker node initialized, waiting for messages");

ros::spin();

return 0;

}

Full code looks like (here)[https://github.com/neil-okikiolu/jetson-nano-ros-vision-ai/blob/master/src/node_imagetaker_detectnet.cpp]

Then add the following to your <catkin-workspace-loaction>/<package-name>/CMakeLists.txt

add_executable(imagetaker_detectnet

src/node_imagetaker_detectnet.cpp

src/image_converter.cpp

)

target_link_libraries(imagetaker_detectnet ${catkin_LIBRARIES} jetson-inference)

Verify⌗

Run catkin_make to compile your code and make sure no errors show up

If any errors show up, make a comment and I will try to help you with it

In a terminal run

roscore

In another terminal run

# we are running at 1280x720 @ 24 FPS for now

roslaunch jetson_csi_cam jetson_csi_cam.launch sensor_id:=0 width:=1280 height:=720 fps:=24

# if your camera is in csi port 1 change sensor_id to 1

- NOTE: you may see a warning about a camera calibration file, you can ignore it

In a third terminal run

# to check if we are getting images from the camera

rostopic list

# you should see /csi_cam_0/camera_info or /csi_cam_1/camera_info

rostopic hz /csi_cam_<0 or 1>/camera_info

You should see something like:

average rate: 1.937

min: 0.516s max: 0.516s std dev: 0.00000s window: 2

average rate: 1.938

min: 0.515s max: 0.516s std dev: 0.00048s window: 4

average rate: 1.937

min: 0.515s max: 0.517s std dev: 0.00056s window: 6

average rate: 1.937

min: 0.515s max: 0.517s std dev: 0.00048s window: 8

average rate: 1.937

min: 0.515s max: 0.517s std dev: 0.00047s window: 10

average rate: 1.937

min: 0.515s max: 0.517s std dev: 0.00045s window: 12

average rate: 1.937

min: 0.515s max: 0.517s std dev: 0.00053s window: 14

...

In the third terminal Ctrl^c to stop the rostopic hz, now we will run our ROS node.

To specify a model, uncomment the string name for the model you want in the main() funtion

# specified model

rosrun <package-name> imagetaker_detectnet

In rqt_image_view click the drop down and select either imagetaker_detectnet/image_detections to see the image segmentation results in real-time!

This was long, thanks for staying till this point. Come back later for more image inferencing

If you have any tips or questions feel free to leave a comment!

Now we have learned how to use the pre made model classes (segnet, imagenet, detectnet)

Next time we will actually make a our own custom C++ model class for poseNet (Pose detection).

We will do a deep dive of Nvidia’s Tensor RT and Image Processing with CUDA.

See you then!

Notes⌗

- the first time you run the node, it has to optimize the model in Tensor RT so it will go on for a couple of minutes

- just Wait

- on your next run, the model is cached and it will not take long to start up

- use

rqt_image_viewto recieve images in real time- if you are running the nano remotely, use SSH with the

-Xflag for forwarding the UI - e.g

ssh -X mynano@192.123.456.789

- if you are running the nano remotely, use SSH with the

Links to source code⌗

Full source code available on github